A Brief History of Random Numbers

“As an instrument for selecting at random, I have found nothing superior to dice,” wrote statistician Francis Galton in an 1890 issue of Nature. “When they are shaken and tossed in a basket, they hurtle so variously against one another and against the ribs of the basket-work that they tumble wildly about, and their positions at the outset afford no perceptible clue to what they will be even after a single good shake and toss.”

How can we generate a uniform sequence of random numbers? The randomness so beautifully and abundantly generated by nature has not always been easy for us humans to extract and quantify. The oldest known dice were discovered in a 24th century B.C. tomb in the Middle East. More recently, around 1100 B.C. in China, turtle shells were heated with a poker until they cracked at random, and a fortune teller would interpret the cracks. Centuries after that, I Ching hexagrams for fortunetelling were generated with 49 yarrow stalks laid out on a table and divided several times, with results similar to performing coin tosses.

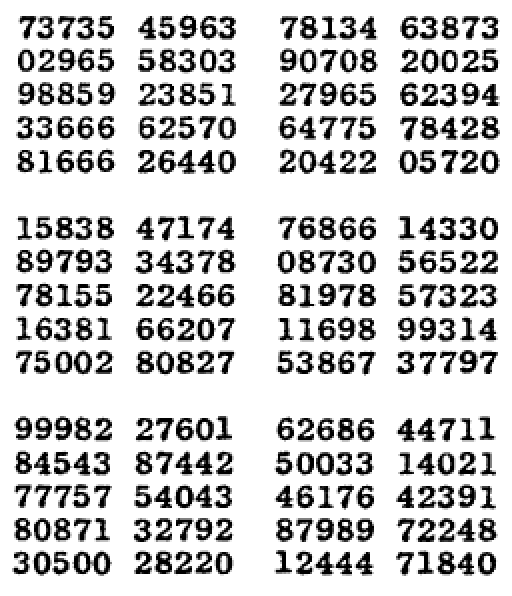

Excerpt from “A Million Random Digits with 100,000 Normal Deviates”

But by the mid-1940s, the modern world demanded a lot more random numbers than dice or yarrow stalks could offer. RAND Corporation created a machine that would generate numbers using a random pulse generator. They ran it for a while and gathered the results into a book titled A Million Random Digits with 100,000 Normal Deviates. What now might seem like an absurd art project was, back then, a breakthrough. For the first time, a nice long sequence of high-quality random numbers was made available to anyone who needed it: scientists, researchers, mathematicians. (The book was reprinted by RAND in 2001 and is available on Amazon.)

A similar machine, ERNIE, designed by the now-famous Bletchley Park WWII codebreaking team in the 1940s, was used to generate random numbers for the UK Premium Bond lottery. To quell fears about the fairness and accuracy of ERNIE, the Post Office made a great documentary in the early 1960s called The Importance of Being E.R.N.I.E.

In 1951, a random number generator was first added to a general-purpose computer, the Ferranti Mark 1. The Mark 1 shipped with a built-in random number instruction that could generate 20 random bits at a time, using electrical noise. The feature was designed by the grandfather of computing, Alan Turing. Christopher Strachey put it to good use by coding up an aleatory love note generator. Here’s an sample love note, from David Link’s 2009 resurrection of Strachey’s program:

JEWEL LOVE

MY LIKING HUNGERS FOR YOUR ADORABLE INFATUATION.

YOU ARE MY EROTIC ARDOUR.: MY FOND RAPTURE. MY THIRST

SIGHS FOR YOUR INFATUATION. MY HEART SEDUCTIVELY WISHES YOUR

BREATHLESS LONGING.

YOURS CURIOUSLY

M. U. C.

Turing’s random number instruction was maddening for programmers at the time because it created too much uncertainty in an environment that was already so unpredictable. We expect consistency from our software, but programs that used the instruction could never be run in any consistently repeatable way, which made them nearly impossible to test.

The idea of a pseudorandom number generator (PRNG) provided a possible path forward. A PRNG is a random number generator expressed as a deterministic math function. It can be called repeatedly to deliver a sequence of random numbers, but under the same initial conditions (if given the same initial “seed” value) it will always produce the same sequence.

John von Neumann developed a PRNG around 1946. His idea was to start with an initial random seed value, square it, and slice out the middle digits. If you repeatedly square the result and slice out the middle digits, you’ll have a sequence of numbers that exhibit the statistical properties of randomness.

Von Neumann’s approach didn’t stand the test of time, however, because no matter what seed value was used, the sequence would eventually fall into a short repeated cycle of numbers, like 8100, 6100, 4100, 8100, 6100, 4100, …

Avoiding cycles is impossible when you are working with a deterministic random function whose subsequent value is based on the previous value. But what if the period of the cycle is really, really big, such that it practically didn’t matter?

The mathematician D. H. Lehmer made good progress toward this idea in 1949 with a linear congruential generator (LCG). Here’s a naive PRNG called The Central Randomizer, based on Lehmer’s approach and written in JavaScript in 1995:

// The Central Randomizer 1.3 (C) 1997

// by Paul Houle (paul@honeylocust.com)

// See: http://www.honeylocust.com/javascript/randomizer.html

rnd.today=new Date();

rnd.seed=rnd.today.getTime();

function rnd() {

rnd.seed = (rnd.seed*9301+49297) % 233280;

return rnd.seed/(233280.0);

};

function rand(number) {

return Math.ceil(rnd()*number);

};

There are several magic numbers at play here. These numbers (usually primes) were selected to maximize the period — the number of iterations before the sequence generated by rand() would begin to repeat itself. This PRNG uses the current time as a seed value, and the period is at most 233280.

The Central Randomizer became popular because JavaScript 1.0 had no built-in Math.random() function, and everyone wanted their Web 1.0 banner ads to rotate at random. “I wouldn’t use it to keep secrets,” the author Paul Houle wrote, “but it’s good for many uses.”

The Internet did need to keep secrets, though. Transport layer encryption for HTTP connections (TLS/SSL) was developed around 1995, and its security depends on a high quality PRNG, especially on the web server side. This development may have led to a period of wild innovation in RNGs. If you look at all the patents from the mid-90s for novel random number generators, it feels like a more modern version of the attempts to build the first airplane.

The most common CPUs of the mid-1990s did not have an instruction for generating random numbers, so good seed values were hard to come by back then. This became a real security issue when Phillip Hallam-Baker discovered that Netscape’s SSL web server (one of the biggest on the market at the time) used a combination of the current time and a couple of process IDs to seed its random number generator. Hallam-Baker showed that an attacker could easily guess the seed value and decrypt as much of the server’s traffic as they could get their hands on. Guessing the seed value is a common attack, though it has become more sophisticated: here’s a beautiful walkthrough of a successful attack on Hacker News, from 2009.

By 1997, computer scientists were fed up with the limited options for generating truly random numbers, so a team at SGI created LavaRand, which was a webcam pointed at a couple of lava lamps on a desk. The image data from the camera was an excellent entropy source — a True Random Number Generator (TRNG) like Turing’s — and it could generate 165Kb/s of random data. In Silicon Valley fashion, the lava lamp rig was quickly patented.

A founder of Autodesk, John Walker, who was intent on spreading randomness around the world created HotBits, a Random Numbers-as-a-Service app backed by a geiger counter that guarantees true quantum randomness. Random.org, created in 1998, provides free Truly Random Numbers. They now offer mobile apps for truly random coin flipping, dice rolling, card shuffling, and so on.

Most of these inventions have fallen by the wayside, but one software PRNG, called The Mersenne Twister, developed in 1997 by Makoto Matsumoto (松本 眞) and Takuji Nishimura (西村 拓士) is a home run. It balances performance and quality beautifully, and it has stood the test of time. It’s based on the idea of an linear feedback shift register (LFSR), which produces a deterministic sequence with very long cycle periods. In current implementations, it has a period of 2¹⁹⁹³⁷− 1, and it’s still the default PRNG in many programming languages today.

Since then, several newer PRNGs have emerged for different applications, and some researchers feel it’s time to let go of the Mersenne Twister. Newer PRNG families include Xorshift (2003), Permuted Congruential Generator (PCG) (2014), and Xoroshiro128+ (2018, now the default for many web browser JavaScript engines). These all offer several variants for different use cases.

Meanwhile, on the hardware side of things, in 1999 Intel added an on-chip random number generator to its i810 server chipset. Finally, nearly 50 years after Turing first designed the Mark 1’s RNG, new machines had a good local source of randomness from thermal noise — a true random number generator (TRNG). While this was a big step, it was still not as fast as software PRNGs, so high-performance cryptograpy software still had to rely on a PRNGs that could now, at least, be regularly reseeded by the built-in TRNG instruction.

There’s a whole class of PRNGs that we still haven’t covered: the cryptographically secure PRNG (CSPRNG). (These acronyms! No wonder some people think computer science is boring.) The CSPRNG became very important for securing communication over TLS, WPA2 Wi-Fi connections, etc. What constitutes a CSPRNG? Here’s a 131-page paper detailing the statistical requirements. Have fun.

Needless to say, a CSPRNG has very strong requirements. The Mersenne Twister is not a CSPRNG because its values can be predicted if you have a big enough sample of previous values in the sequence. Some cryptographic stream ciphers such as ChaCha20 can be used as CSPRNGs.

More recently, in 2012, Intel added the RDRAND and RDSEED instructions for TRNG using an on-chip thermal noise generator that provides 500MB/s of throughput. AMD followed suit. So, most computers today finally have a decent TRNG, right?

Yes, sort of. The RDRAND instruction is surrounded by conspiracy theories about its integrity. Is there a subtle weakness in it, added specifically for the NSA and no one else? Nobody knows for sure. Well, I suppose somebody somewhere does know, but they sure aren’t Tweeting about it.

In addition to the TRNG available on most CPUs, there’s a TRNG in every Trusted Platform Module (TPM) security chip. These chips have nearly become ubiqitious, and the Linux kernel will use a TPM along with other hardware sources to help populate the kernel entropy pool.

Random data generated by REDOUBLER, a hardware random number generator.

Open source hardware TRNGs have emerged in recent years, too. The strength of these systems comes from the transparency of their design: You can inspect the circuit yourself, and you can build it at home using off-the-shelf components. Full transparency leaves no doubts about the awesome randomness of these circuits. REDOUBLER and Infinite Noise TRNG are two hardware random number generators with schematics and driver code on GitHub.

Today, there is still debate over which random number generation schemes to use in OS kernel software, programming languages, and security packages like OpenSSL or OpenSSH. There are many variants of these algorithms for different speed, space, and security requirements, and security experts are always looking for new ways to attack an implementation. But for most everyday uses, you can comfortably use the /dev/urandom special file on most operating systems, getrandom(0) in the Linux kernel, or the rand() function in most programming languages, to get a fast and endless stream of randomness that would have made Alan Turing very happy.

Sources:

The methods for choosing good magic numbers for LCGs are described thoroughly in The Art of Computer Programming vol. 2, chapter 3, by Donald Knuth.

Christopher Strachey’ Nineteen-Fifties Love Machine, The New Yorker

Linux i830 Random Number Generator documentation

Lavarand: Wikipedia, Wired Magazine article

Mersenne Twister: Wikipedia

Thermal noise: Wikipedia

Cloudflare wrote a good blog post in 2013 about why randomness matters

RDRAND: Wikipedia, Stack Overflow article on throughput